AI-based assistants improve the user experience and reduce support costs. However, their implementation involves a lot of repetitive technical tasks, such as connecting to different LLMs or implementing tool calling.

Hashbrown takes this work off our hands. The open-source project, backed by two well-known experts in the Angular community, supports all relevant model providers, including Gemini (Google), GPT (OpenAI), Azure (Microsoft), and Llama (Meta).

This article shows how to extend an existing Angular application with a chat assistant using Hashbrown.

📂 Source Code (🔀 see branch hashbrown)

Example Application

The example application used here is the flight search, which I use to demonstrate numerous Angular features. It provides a chat window, which can be displayed on the right:

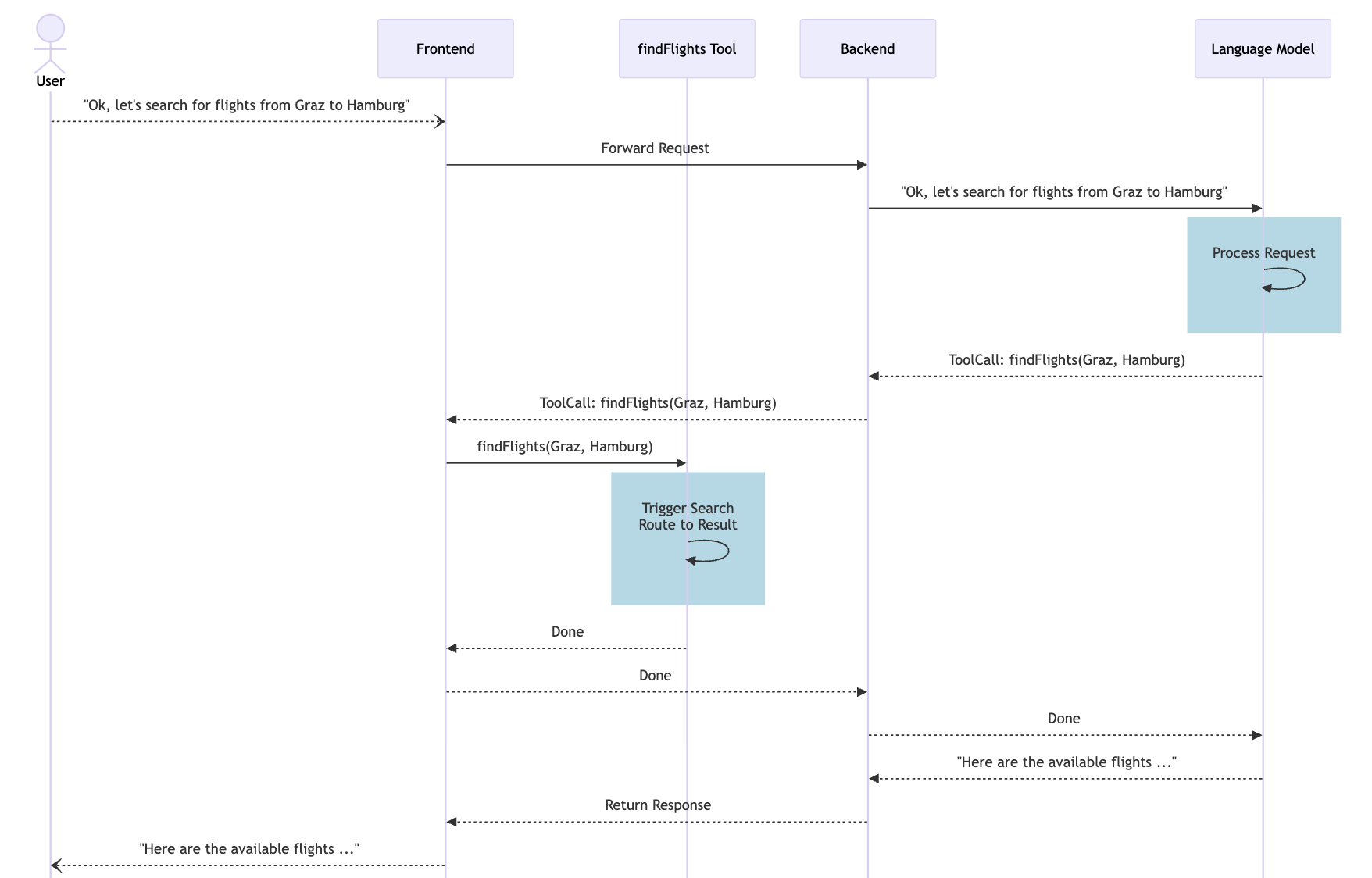

As the chat history in the image shows, the assistant can request additional data and trigger actions within the application when needed. This is made possible by tool calling: The LLM instructs the app to perform a specific function and send back the results.

The chat history lists these tool calls by name as the LLM requests them, but it does not print their arguments, such as {from: 'Graz', to: 'Hamburg'} for findFlights.

While chat messages like "Tool Call: findFlights" inform us developers about internal processes, such information is likely to be confusing for end users. Therefore, it would make sense to translate this technical information into something like "Loading flights from Graz to Hamburg".
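A minimal sketch of such a translation could be a lookup from tool names to user-facing text. The helper describeToolCall, the label map, and the fallback wording are assumptions made for illustration; only the tool name findFlights and its arguments from and to come from the example application.

```typescript
// Hypothetical helper: turns a technical tool call into user-facing text.
type ToolCallInfo = { name: string; args: Record<string, unknown> };
type LabelFactory = (args: Record<string, unknown>) => string;

// One label factory per known tool; extend this map as tools are added.
const toolCallLabels = new Map<string, LabelFactory>([
  [
    'findFlights',
    (args) => `Loading flights from ${String(args['from'])} to ${String(args['to'])}`,
  ],
]);

function describeToolCall(call: ToolCallInfo): string {
  const label = toolCallLabels.get(call.name);
  // Fall back to a neutral message for tools without a dedicated label.
  return label ? label(call.args) : 'Working on your request';
}
```

In a template, such a function could replace the raw "Tool Call: …" output, while the technical details stay available in a tooltip for developers.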

Setting up Hashbrown

To use Hashbrown, we need a few npm packages:

```bash
npm install @hashbrownai/{core,angular,google}
```

The package @hashbrownai/angular includes an Angular-based API for the framework-agnostic core library. A framework binding for React is also available. The package @hashbrownai/google provides access to Google's Gemini models. Hashbrown offers additional packages for other model families out of the box (e.g., @hashbrownai/openai).

For programmatic access to LLMs, the application requires an API key, which is usually linked to a paid license. However, Google's Gemini offers a comprehensive free package for testing. An API key can be generated in Google AI Studio with just a few clicks.

To prevent the API key from being published to the web, it must not be used directly in the Angular frontend. Instead, a very lean backend is used, which acts as an intermediary between the frontend and LLM:

```typescript
// Taken from hashbrown.dev and adjusted for our example
import express from 'express';
import cors from 'cors';
import { Chat } from '@hashbrownai/core';
import { HashbrownGoogle } from '@hashbrownai/google';

const host = process.env['HOST'] ?? 'localhost';
const port = process.env['PORT'] ? Number(process.env['PORT']) : 3000;
const GOOGLE_API_KEY = process.env['GOOGLE_API_KEY'];

if (!GOOGLE_API_KEY) {
  throw new Error('GOOGLE_API_KEY is not set');
}

const app = express();

app.use(cors());
app.use(express.json());

app.post('/api/chat', async (req, res) => {
  const completionParams = req.body as Chat.Api.CompletionCreateParams;

  const response = HashbrownGoogle.stream.text({
    apiKey: GOOGLE_API_KEY,
    request: completionParams,
    transformRequestOptions: (options) => {
      // Enforce the model and the system instruction on the server side
      options.model = 'gemini-2.5-flash';
      options.config = options.config || {};
      options.config.systemInstruction = `
        You are Flight42, an UI assistent that helps passengers with finding
        flights.

        - Voice: clear, helpful, and respectful.
        - Audience: passengers who want to find flights or have questions about
          booked flights.

        Rules:
        - Only search for flights via the configured tools
        - Never use additional web resources for answering requests
        - Do not propose search filters that are not covered by the provided tools
        - Do not propose any further actions
        - Provide enumerations as markdown lists
      `;
      return options;
    },
  });

  // Stream the response chunks through to the frontend
  res.header('Content-Type', 'application/octet-stream');

  for await (const chunk of response) {
    res.write(chunk);
  }

  res.end();
});

app.listen(port, host, () => {
  console.log(`[ ready ] http://${host}:${port}`);
});
```

The implementation of this backend, which is partly based on the Hashbrown documentation, expects the API key to be in the environment variable GOOGLE_API_KEY. On macOS and Linux, this can be achieved with:

```bash
export GOOGLE_API_KEY=abcde…
```

On Windows, use:

```bat
set GOOGLE_API_KEY=abcde…
```

The transformRequestOptions function allows the backend to supplement or override the options set by the frontend. This is an important mechanism because these settings have direct cost implications. In this example, the backend enforces the cost-effective, all-around model gemini-2.5-flash and defines a system instruction that strictly limits the model to flight searches. This prevents users from consuming expensive LLM resources for requests that are out of scope.

Before they are overwritten, the values originally requested by the frontend are still available in the options passed to transformRequestOptions (model and system instruction). This allows for controlled negotiation: at the user's request, the server could, in selected cases, switch to a more powerful (but also more expensive) model or adjust the system instruction.
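Such a negotiation could be sketched as a small allow-list check. Everything here is an assumption for illustration: the function resolveModel, the isPremiumUser flag, and the choice of gemini-2.5-pro as the more powerful alternative are not part of the example application.

```typescript
// Hypothetical allow-list: the models the backend is willing to pay for.
const DEFAULT_MODEL = 'gemini-2.5-flash';
const ALLOWED_MODELS = new Set<string>([DEFAULT_MODEL, 'gemini-2.5-pro']);

// Decides which model to use for a request.
// `requested` is the model the frontend asked for, if any.
function resolveModel(requested: string | undefined, isPremiumUser: boolean): string {
  // Only selected users may deviate from the default,
  // and only to a model on the allow-list.
  if (isPremiumUser && requested !== undefined && ALLOWED_MODELS.has(requested)) {
    return requested;
  }
  return DEFAULT_MODEL;
}
```

Inside transformRequestOptions, the assignment could then read options.model = resolveModel(options.model, isPremiumUser), assuming options.model still holds the frontend's value at that point and that the flag comes from the application's own authentication.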

The URL of this minimal server is configured at the startup of the Angular application via provideHashbrown:

```typescript
import { provideHashbrown } from '@hashbrownai/angular';
[…]

bootstrapApplication(AppComponent, {
  providers: [
    provideHttpClient(),
    […]
    provideHashbrown({
      baseUrl: 'http://localhost:3000/api/chat',
      middleware: [
        (req) => {
          console.log('[Hashbrown Request]', req);
          return req;
        },
      ],
    }),
  ],
});
```

The optional middleware defined here logs all requests to the server in the JavaScript console. These messages give us a better understanding of how such systems work and also help with troubleshooting.

A Chat With the LLM of Your Choice

Hashbrown provides several implementations of Angular's Resource API for chatting with LLMs. For our purposes, we use the chatResource:

```typescript
import { chatResource } from '@hashbrownai/angular';
[…]

@Component({ … })
export class AssistantChatComponent {
  […]

  message = signal('');

  chat = chatResource({
    model: 'gemini-2.5-flash',
    system: `
      You are Flight42, an UI assistent that helps passengers with
      finding flights.
      […]
    `,
    tools: [
      findFlightsTool,
      toggleFlightSelection,
      showBookedFlights,
      getBookedFlights,
      […]
    ],
  });

  submit() {
    const message = this.message();
    this.message.set('');
    this.chat.sendMessage({ role: 'user', content: message });
  }

  […]
}
```

The chatResource provides the stateless LLM with the complete chat history on every request. This allows the model to reference previous messages. For example, if a conversation revolves around flight #4711, the LLM recognizes what is meant by "this flight".
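The idea of resending the full history to a stateless model can be illustrated without any library. The following sketch is not Hashbrown's implementation; createChatSession and the StatelessModel type are hypothetical names, and the model is reduced to a plain function that sees only what the current request contains.

```typescript
type ChatMessage = { role: 'user' | 'assistant'; content: string };

// Stand-in for a stateless LLM: it knows nothing beyond the messages passed in.
type StatelessModel = (messages: readonly ChatMessage[]) => string;

function createChatSession(model: StatelessModel) {
  // The client, not the model, keeps the conversation state.
  const history: ChatMessage[] = [];

  return {
    send(content: string): string {
      history.push({ role: 'user', content });
      // The complete history is sent with every single request.
      const reply = model(history);
      history.push({ role: 'assistant', content: reply });
      return reply;
    },
    messages: (): readonly ChatMessage[] => history,
  };
}
```

Because the second request already contains the first question and its answer, a real model can resolve references such as "this flight". The price is that requests grow with the length of the conversation.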

Furthermore, the chatResource supports tool calling: The model receives all functionalities provided by the frontend via the tools property, e.g., findFlights for flight searches, and can request their invocation. The technical implementation of such tools is discussed in the next section.

The value of the chatResource contains the chat history to be displayed:

```html
@for (message of chat.value(); track $index) {
  <article class="msg assistant">
    <div class="avatar">{{ icons[message.role] }}</div>
    <div>
      <div class="bubble">
        {{ message.content }}
        @if (message.role === 'assistant') {
          @for (toolCall of message.toolCalls; track toolCall.toolCallId) {
            <div [title]="toolCall.args | json">
              Tool Call: {{ toolCall.name }}
            </div>
          }
        }
      </div>
    </div>
  </article>
}
```

The role attribute indicates the sender of each chat message. For example, the value assistant identifies messages from the LLM, while user indicates the frontend user. Messages from the LLM can also contain requests for tool calls, which the template also presents. Each tool call refers to the name of the desired tool (e.g., findFlights) and the arguments to be passed (e.g., {from: 'Graz', to: 'Hamburg'}).


Providing Tools

The provided tools are objects that the application creates using the createTool function:

```typescript
import { createTool } from '@hashbrownai/angular';
import { s } from '@hashbrownai/core';
[…]

export const findFlightsTool = createTool({
  name: 'findFlights',
  description: `
    Searches for flights and redirects the user to the result page where
    the found flights are shown.

    Remarks:
    - For the search parameters, airport codes are NOT used but the city
      name. First letter in upper case.
  `,
  schema: s.object('search parameters for flights', {
    from: s.string('airport of departure'),
    to: s.string('airport of destination'),
  }),
  handler: async (input) => {
    const store = inject(FlightBookingStore);
    const router = inject(Router);

    store.updateFilter({
      from: input.from,
      to: input.to,
    });

    router.navigate(['/flight-booking/flight-search']);
  },
});
```

The tool name must be unique and comply with the model's specifications. A good rule of thumb: anything that's valid as a variable name in TypeScript should also work here. The LLM uses the description to decide whether the tool is relevant to the current task.
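This rule of thumb can be turned into a quick sanity check, for example in a unit test. The sketch below is an assumption, not part of Hashbrown: findInvalidToolNames is a hypothetical helper, and its pattern is deliberately stricter than TypeScript's identifier rules (ASCII letters, digits, and underscores only), because the exact naming rules differ between model providers.

```typescript
// Conservative pattern: ASCII letters, digits, and underscores,
// not starting with a digit. Real provider rules may differ.
const IDENTIFIER_LIKE = /^[A-Za-z_][A-Za-z0-9_]*$/;

// Returns all names that are malformed or that repeat an earlier name.
function findInvalidToolNames(names: readonly string[]): string[] {
  const seen = new Set<string>();
  const invalid: string[] = [];

  for (const name of names) {
    if (!IDENTIFIER_LIKE.test(name) || seen.has(name)) {
      invalid.push(name);
    }
    seen.add(name);
  }

  return invalid;
}
```

An empty result means that all names pass this local check. It does not guarantee that a specific provider accepts them.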

The schema defines the arguments that the model must pass - in this example, an object with the search parameters from and to. Here, too, the LLM uses the textual descriptions as a guide.

For this, Hashbrown provides its own schema language, Skillet. It is reminiscent of libraries such as Zod but is reduced to constructs that LLMs support reliably. Future versions of Hashbrown are planned to also support JSON Schema and to offer a bridge to existing schema libraries (e.g., Zod).

The handler implements the tool: It receives the object defined in the schema and delegates the task to the system logic, such as the store or the router. Handlers can also return values to the model, like the getLoadedFlights tool:

```typescript
export const getLoadedFlights = createTool({
  name: 'getLoadedFlights',
  description: 'Returns the currently loaded/displayed flights',
  handler: () => {
    const store = inject(FlightBookingStore);
    // The resolved value is sent back to the model as the tool result
    return Promise.resolve(store.flightsValue());
  },
});
```

Hashbrown does not use Skillet to formally describe the return value, as the model accepts any form of response. If the frontend wants to provide the model with information about the structure of the delivered result, this can be done as free text in the description property.

Under the Hood

A look at the messages sent to the LLM shows how the tool calling works:

```json
{
  "model": "gpt-5-chat-latest",
  "system": "You are Flight42, an UI assistent [...]",
  "messages": [
    [...],
    {
      "role": "user",
      "content": "Ok, let's search for flights from Graz to Hamburg."
    },
    {
      "role": "assistant",
      "content": "",
      "toolCalls": [
        {
          "id": "call_AeFJ3xsnNw29EoQVo7hR9Qtu",
          "index": 0,
          "type": "function",
          "function": {
            "name": "findFlights",
            "arguments": "{\"from\":\"Graz\",\"to\":\"Hamburg\"}"
          }
        }
      ]
    },
    {
      "role": "tool",
      "content": {
        "status": "fulfilled"
      },
      "toolCallId": "call_AeFJ3xsnNw29EoQVo7hR9Qtu",
      "toolName": "findFlights"
    },
    {
      "role": "assistant",
      "content": "Here are the available flights [...]",
      "toolCalls": []
    }
  ],
  "tools": [
    {
      "description": "Searches for flights [...]",
      "name": "findFlights",
      "parameters": {
        "$schema": "http://json-schema.org/draft-07/schema#",
        "type": "object",
        "properties": {
          "from": {
            "type": "string",
            "description": "airport of departure"
          },
          "to": {
            "type": "string",
            "description": "airport of destination"
          }
        },
        "required": [
          "from",
          "to"
        ],
        "additionalProperties": false,
        "description": "search parameters for flights"
      }
    },
    [...]
  ]
}
```

These messages mirror the following chat history:

- Hashbrown sends the user's text-based search query to the model in the role of user.
- The model responds in the role of assistant with a tool call. This includes specifying the name of the tool and the arguments to be passed.
- Hashbrown then triggers the tool that handles the search and route changes.
- Hashbrown, in the tool role, reports the completion of the tool call. If the tool had returned a result, Hashbrown would include it in this message.
- The model responds in the role of assistant.
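The middle steps of this sequence can be sketched as a small dispatch function. This is not Hashbrown's internal code: runToolCalls and the type names are hypothetical, and the message shapes are only modeled on the request shown above. In particular, the value and reason fields are assumptions borrowed from the result shape of Promise.allSettled; the request above only confirms status: "fulfilled".

```typescript
type ToolCall = {
  id: string;
  // As in the request above, the arguments arrive as a JSON string.
  function: { name: string; arguments: string };
};

type ToolResult =
  | { status: 'fulfilled'; value?: unknown }
  | { status: 'rejected'; reason: string };

type ToolMessage = {
  role: 'tool';
  toolCallId: string;
  toolName: string;
  content: ToolResult;
};

type ToolHandler = (args: unknown) => Promise<unknown>;

// Executes the tool calls requested by the model and builds one
// message in the role of tool per call.
async function runToolCalls(
  toolCalls: readonly ToolCall[],
  handlers: ReadonlyMap<string, ToolHandler>,
): Promise<ToolMessage[]> {
  const messages: ToolMessage[] = [];

  for (const call of toolCalls) {
    let content: ToolResult;
    try {
      const handler = handlers.get(call.function.name);
      if (!handler) {
        throw new Error(`Unknown tool: ${call.function.name}`);
      }
      const value = await handler(JSON.parse(call.function.arguments));
      // Tools without a return value only report their completion.
      content = value === undefined ? { status: 'fulfilled' } : { status: 'fulfilled', value };
    } catch (error) {
      // Failures are reported to the model instead of crashing the chat.
      content = { status: 'rejected', reason: error instanceof Error ? error.message : String(error) };
    }
    messages.push({ role: 'tool', toolCallId: call.id, toolName: call.function.name, content });
  }

  return messages;
}
```

The resulting messages would be appended to the chat history and sent to the model with the next request, which then answers in the role of assistant.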

To ensure the LLM is aware of the available tools, these, along with their metadata, are transferred in the tools section at the end. This section contains the textual descriptions embedded in the source code as well as the schema definitions of the expected arguments.

For better illustration, the following figure depicts the process as a sequence diagram. This diagram also shows the backend, which allows the frontend access to the model:

Conclusion

Hashbrown makes it easy to extend frontend applications with chat-based AI assistants. It handles complex tasks such as LLM integration and tool calling, allowing developers to focus on the core business value. In just a few steps, you can create an assistant that controls user interactions, triggers application functions, and responds contextually.

In practice, it's essential to consider that LLMs are not deterministic: the same query can lead to slightly different results. Furthermore, it's worthwhile to refine the tool descriptions step by step and test them with typical example queries to achieve reliable interaction between the model and the application.